Over the next few days, I’m going to see how far we can get covering the entire professional test development process and showing how these steps can inform the creation of more accurate and challenging assessments for MOOCs and similar high-scale learning programs. Those interested in more in-depth coverage of this subject can check out this book, which I contributed to a few years back, or contact me if they want to be pointed to additional sources of information.

The builders of professionally developed tests (such as state-wide educational exams, the SAT or ACT, and professional licensure or certification exams) begin not by writing a bunch of questions, but by developing a set of goals.

For example, the goal of a college entrance exam is to determine student readiness for higher education, while the goal of an IT certification exam is to find out whether someone has mastered a specific technology.

Sometimes these goals require the development of a “construct” (such as the one behind the SAT, which says that language and math skills equate to college achievement). As another example, when I worked on a certification program years ago to measure student Digital Literacy skills, the first several months of that project involved literature reviews and discussions with high-level subject matter experts to determine what we meant when we said “Digital Literacy.”

The ultimate goal of all this research is to create a test blueprint that begins with high-level domains of knowledge and skill and ends with a set of individual exam objectives – ideally ones that can be both taught and assessed.

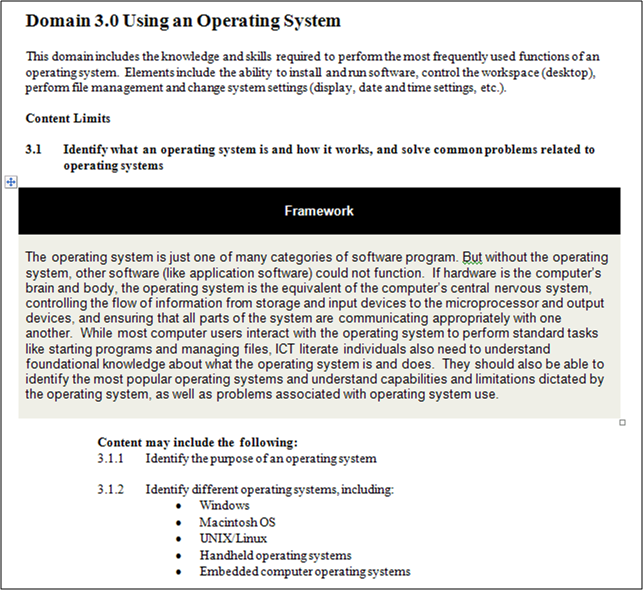

To see what this looks like, here’s a snippet from the testing blueprint for that Digital Literacy certification I just mentioned:

Notice that this outline (which is part of a far-larger test blueprint) begins with the definition of a high-level domain (Using an Operating System) and ends with a set of individual objectives, each of which can be assessed using a single test question.

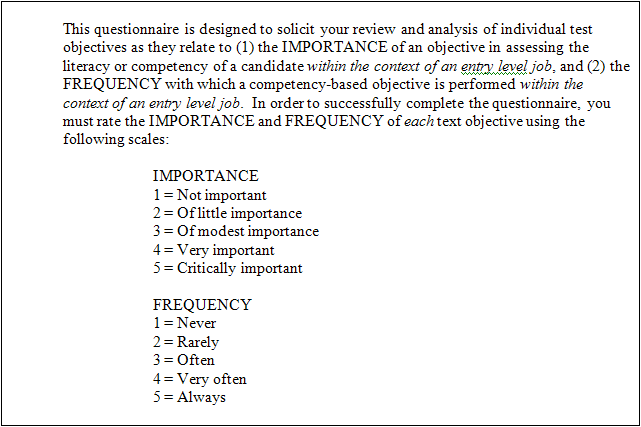

Like the definition of a construct, blueprint design is often the result of months of research, which can include the input of dozens of high-level subject matter experts. And once these experts (usually working under the direction of a professional exam developer) create a draft of which objectives should be covered in a test, that draft is then reviewed by an even larger group of experts who (through focus groups or surveys like the following) get to “vote” on which objectives are most important.

This survey or focus group research results in a set of statistics used to prioritize what will be covered in an exam (for example, survey research indicated that the operating system domain illustrated above should constitute 43% of overall exam content). And once all of this subject matter expert input has been processed, an exam that follows these guidelines is said to be “content valid,” meaning it reflects the opinion of large numbers of experts regarding what should and should not be included in a test that will determine mastery of a specific subject.
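To make the arithmetic concrete, here is a minimal sketch of one simple way survey ratings could be turned into blueprint percentages and question counts. It is not the method used on the Digital Literacy project: the rating totals, the two unnamed domains, the 40-question exam length, and the function names `domain_weights` and `allocate_items` are all assumptions made up for illustration, with the numbers chosen so the operating system domain lands on the 43% mentioned above.

```python
from math import floor

def domain_weights(ratings):
    """Turn per-objective importance totals into each domain's share.

    ratings maps a domain name to a list of importance totals, one per
    objective (for example, the sum of all expert ratings for it).
    """
    totals = {domain: sum(points) for domain, points in ratings.items()}
    grand_total = sum(totals.values())
    return {domain: total / grand_total for domain, total in totals.items()}

def allocate_items(weights, total_items):
    """Split total_items across domains in proportion to their weights.

    Uses largest-remainder rounding so the counts always add up to
    total_items.
    """
    exact = {domain: w * total_items for domain, w in weights.items()}
    counts = {domain: floor(x) for domain, x in exact.items()}
    leftover = total_items - sum(counts.values())
    # Hand the remaining questions to the domains that lost the most
    # when their exact share was rounded down.
    by_remainder = sorted(exact, key=lambda d: exact[d] - counts[d], reverse=True)
    for domain in by_remainder[:leftover]:
        counts[domain] += 1
    return counts

# Hypothetical survey results: invented numbers, not real project data.
survey = {
    "Using an Operating System": [45, 40, 44],
    "Hypothetical Domain B": [40, 35],
    "Hypothetical Domain C": [48, 48],
}

weights = domain_weights(survey)
counts = allocate_items(weights, total_items=40)

for domain in survey:
    print(f"{domain}: {weights[domain]:.0%} of content, {counts[domain]} questions")
```

With these invented totals, the operating system domain comes out at 43% of the content and 17 of the 40 questions, with the other two domains receiving 10 and 13. A real program would likely combine several rating scales (importance, frequency, criticality) and have experts review the final numbers, so treat this as the skeleton of the idea only.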

As mentioned previously, within a classroom environment the test developer (i.e., the teacher) can rely on his or her own informed judgment about which subjects to include in a test, and in which proportions, in place of the statistical analysis used to make these decisions for a professionally developed exam.

But once you start giving tests that need to make accurate comparisons between thousands of students working in different locations, times and environments, then the type of statistical work described above is the best method for determining if your exam is covering the right stuff.

Only when this type of planning work has been completed do test developers sit down to begin writing questions. And how they do this will be the subject of tomorrow’s posting.
